Eric Snyder, a technologist leading innovation at the University of Rochester’s Wilmot Cancer Institute, warned that healthcare’s AI ambitions are undermined by “Innovation Theater” and cultural paralysis.

He argued the root cause of the industry’s data crisis is a personnel issue, criticizing the practice of placing clinicians or MBAs, rather than developers and architects, in top technology roles.

Snyder offered a pragmatic alternative: his team fulfills basic data report requests in under 24 hours and stood up a secure on-prem LLM in a single day. In his view, this shows that simple solutions exist when the right people are empowered.

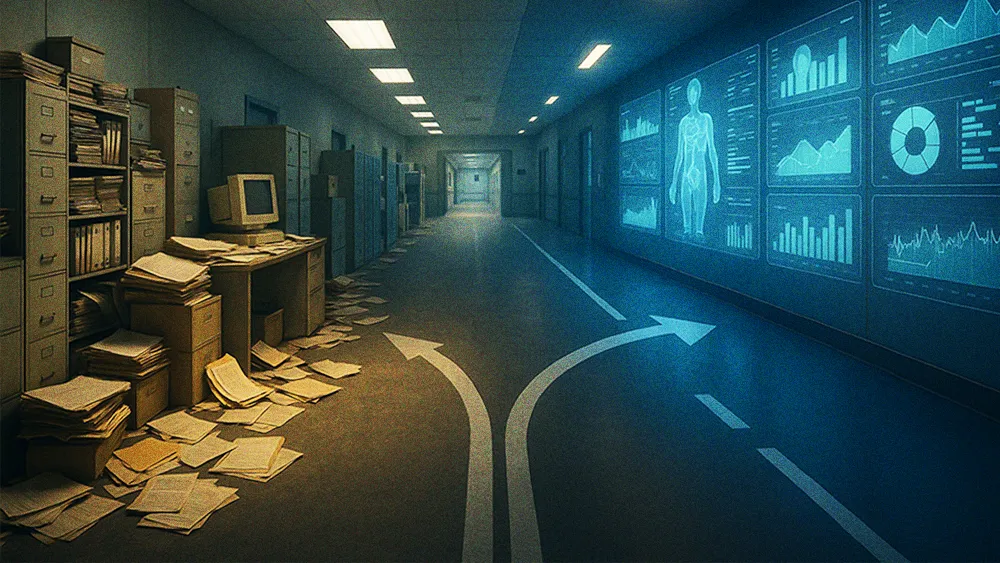

While the healthcare industry is fixated on unlocking immediate ROI with AI, some technology leaders are calling out the data health risks lying beneath the surface. Deploying AI-powered solutions atop dangerously flawed underlying data is like building on a foundation of sticks. It's often more than a mere technical misstep; it's a sign of the industry "going the wrong way" entirely.

Eric Snyder is the Executive Director of Technology & Innovation at the University of Rochester's Wilmot Cancer Institute, where his team's work captured national attention, winning a Digital Health Award last year. Snyder occupies a unique position: he leads an "embedded innovation team that doesn't report through the normal structures," giving him the autonomy to set his own technical vision and innovate with multi-modal technologies ranging from AI to VR/AR and spatial awareness tech.

Unlike many healthcare tech leaders who are MBAs or clinicians, Snyder is a developer and architect by trade. He brings a technologist’s first-principles approach to an industry he believes is too often stuck in its old habits: “If we aren’t taking care of the data first, then the AI is never going to be as great as it could be.”

To understand Snyder’s warning, you have to grasp the scale of healthcare’s data crisis. The problems are not minor bugs; they are foundational flaws in the system’s DNA. It begins with a simple, devastating fact.

A house of cards: "In healthcare, there is no standard definition for anything," Snyder stated. "Even within our own institutions, you don't have the same definitions for certain things, like readmissions. The state defines it differently than a clinical person would." This definitional chaos is compounded by poor data quality. According to Snyder, the data flowing from electronic health records is, on average, 20 to 30% wrong, a catastrophic error rate when that data is used to train AI models. The problem is made worse by the industry's reliance on payer data, which is often incomplete and unrepresentative of the actual patient population, a reliance that runs counter to the broader push for digital and AI transformation. "Training AI models on data that isn't clean is extremely dangerous," he warned.

If the data problems are so severe, why isn't the industry fixing them? Snyder argued the issue isn't technical but cultural. The real-world consequences of this inaction were laid bare during the COVID-19 pandemic, when New York State "had no way to really share data amongst hospitals" to effectively manage the crisis. The root of this paralysis, he contended, is a personnel problem.

Right job; wrong person: “You would never want me to be a doctor just because I can lean that way high level,” Snyder said, offering a sharp analogy. “Why would the opposite hold true? You would never do that in any other industry.” He argued that healthcare consistently makes this error, placing MBAs and clinicians in technology leadership roles instead of seasoned technologists who understand systems from the ground up.

A new way of thinking: Snyder's team offers a different model. By borrowing principles from cybersecurity, they have started from scratch to solve the data problem internally. "We changed the way to think about how the data was coming in," he explained. "We need to redefine all these things into more manageable chunks." The result is a system so efficient that his team can fulfill a basic data report request in under 24 hours, a task that takes weeks or months at other institutions. This isn't new software; it's a new mindset. "We simply have to think differently," he urged.

The final, frustrating piece of the puzzle is what Snyder described as a pervasive cultural resistance to pragmatic solutions, a phenomenon he calls "Innovation Theater." He described leaders at conferences "parroting the same thing" about AI without any real technical depth, driven by a fear of being left behind. This performance of progress masks a deep-seated institutional paralysis. He offered a potent example: the industry-wide hand-wringing over the security of LLMs. While others debate the risks of the cloud, Snyder's team found a simple, localized fix.

Pragmatism over paralysis: “If you’re truly fearful of the security of it, just put it on-prem,” he said. “Everyone’s buying an LLM in the cloud, but that doesn’t always make sense for every scenario either. It took our team a day to stand up an LLM on-prem. You don’t necessarily have to pay the cloud to have great models. The security concern is now localized, the same as every other app.”